Expose your Kubernetes Cluster using Nginx

Recently, I deployed a Kubernetes cluster on a Dell-EMC setup in our office to explore various Kubernetes features. My goal was to experiment with tools like StackGres for PostgreSQL clustering, deploy StatefulSets, and run different Kubernetes workloads. However, I faced a challenge: due to some restrictions, I couldn’t access my VPN service. So I took an unconventional approach and exposed the Kubernetes cluster to the public internet, allowing anyone to reach it. This setup opened up new opportunities for experimenting with Kubernetes from anywhere.

You can even send me a message to try things out in the cluster; the only authentication required is my approval.

Free, Free, Free! A Kubernetes cluster for free!

Here’s the setup of my cluster:

The random planning:

My first random thought was that Kubernetes handles everything through its API, so there might be a specific port that I could expose publicly with a reverse proxy (NGINX’s proxy_pass). I then took a quick look at the kubeconfig, which ultimately gave me the answer I was looking for:

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: [REDACTED]
    server: https://10.10.10.100:6443/
```

What I did was change the server address in the kubeconfig to my publicly accessible domain, which points to the reverse proxy.

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: [REDACTED]
    server: https://myveryveryveryverylongsubdomain.mydomain.com/
```

The first thing I tried:

Being the decent guy that I am, I simply jumped over to my reverse proxy and initially configured it like this:

```nginx
upstream internal-k8s-expose-temp {
    server [k8s_ip:port];
}

server {
    server_name myveryveryveryverylongsubdomain.mydomain.com;

    location / {
        proxy_pass https://internal-k8s-expose-temp;
        # don't verify the API server's self-signed certificate (also NGINX's default)
        proxy_ssl_verify off;
        include proxy_params;
    }

    # "ssl" is required here, otherwise NGINX serves plain-text HTTP/2 on this port
    listen [https_port] ssl http2;
    ssl_certificate /etc/letsencrypt/live/myveryveryveryverylongsubdomain.mydomain.com/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/myveryveryveryverylongsubdomain.mydomain.com/privkey.pem; # managed by Certbot
}
```

At first, it seemed very straightforward: just ignore the certificate error from the upstream server, and that’s it. I was excited at the thought of accessing this internal cluster from home. So I switched my device to a mobile hotspot and tried accessing the cluster. However, when I ran kubectl get pods, I got the following error:

Error from server (Forbidden): pods is forbidden: User "system:anonymous" cannot list resource "pods" in API group "" in the namespace "default"

The thing that actually worked (and it was nuts!):

At first, I thought that forwarding port 6443 alone might not be enough to communicate with the master node, so I set that idea aside. But then something clicked: the port was already in the upstream server block, so what else was kubectl sending? I started investigating what kubectl actually sends when communicating with the cluster, and that’s when I noticed the client certificate data in the kubeconfig. I realized, “Oh, the client certificate is part of this too!” I decoded the client certificate and key, set up the reverse proxy to present them to the API server, and it actually worked.

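```shell
# decode the client certificate from the kubeconfig
echo [client-certificate-data] | base64 -d > /etc/nginx/ssl/client.cert

# decode the private key from the kubeconfig and keep it private
echo [client-key-data] | base64 -d > /etc/nginx/ssl/client.key
chmod 600 /etc/nginx/ssl/client.key
```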
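If you would rather script this step than paste values into echo, here is a minimal Python sketch. The helper name write_pem is hypothetical, and the destination paths simply mirror the ones used in the NGINX config; it decodes one base64 *-data field, checks that the result looks like PEM, and writes it with restrictive permissions when it is the private key.

```python
import base64
import os
from pathlib import Path


def write_pem(b64_value: str, dest: Path, private: bool = False) -> bytes:
    """Decode a kubeconfig *-data field and write the PEM bytes to dest.

    Hypothetical helper: dest paths are assumptions matching the nginx config.
    """
    # validate=True rejects stray characters instead of silently skipping them
    pem = base64.b64decode(b64_value.strip(), validate=True)
    if not pem.lstrip().startswith(b"-----BEGIN "):
        raise ValueError(f"{dest}: decoded data does not look like PEM")
    mode = 0o600 if private else 0o644
    fd = os.open(dest, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, mode)
    with os.fdopen(fd, "wb") as fh:
        fh.write(pem)
    # os.open's mode only applies to newly created files (minus the umask),
    # so set it explicitly in case dest already existed.
    os.chmod(dest, mode)
    return pem
```

For example, write_pem(cert_b64, Path("/etc/nginx/ssl/client.cert")) and write_pem(key_b64, Path("/etc/nginx/ssl/client.key"), private=True), where cert_b64 and key_b64 are the two values from the users section. To see whose identity NGINX will be using, openssl x509 -in /etc/nginx/ssl/client.cert -noout -subject prints the certificate subject; Kubernetes takes the CN as the username and O as the group.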

```nginx
# Corrected nginx conf
upstream internal-k8s-expose-temp {
    server 10.10.10.100:6443;
}

server {
    server_name myveryveryveryverylongsubdomain.mydomain.com;

    location / {
        proxy_pass https://internal-k8s-expose-temp;
        # present the kubeconfig user's client certificate and key to the API server (mTLS)
        proxy_ssl_certificate /etc/nginx/ssl/client.cert;
        proxy_ssl_certificate_key /etc/nginx/ssl/client.key;
        include proxy_params;
    }

    listen 443 ssl http2;
    ssl_certificate /etc/letsencrypt/live/myveryveryveryverylongsubdomain.mydomain.com/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/myveryveryveryverylongsubdomain.mydomain.com/privkey.pem; # managed by Certbot
}
```

What was going on in the background:

The issue came down to where TLS ends. The Kubernetes API server authenticates kubectl by the client certificate presented during the TLS handshake (mutual TLS, or mTLS). With the reverse proxy in front, kubectl’s TLS session ends at NGINX rather than at the API server, so its client certificate never reaches the cluster. NGINX then opens a separate TLS connection to the API server, and in the first configuration that connection carried no client certificate, so the API server treated every request as system:anonymous and denied it. By configuring NGINX with proxy_ssl_certificate and proxy_ssl_certificate_key, NGINX authenticates itself to the Kubernetes API server via mTLS using the kubeconfig user’s certificate. NGINX then forwards external requests (including those from kubectl) to the internal Kubernetes API, and the API server treats each of them as coming from that user.
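To see the two halves of this in isolation, here is a minimal Python sketch of the upstream leg that NGINX performs. The function names are hypothetical; load_cert_chain plays the role of proxy_ssl_certificate/proxy_ssl_certificate_key, and disabling verification mirrors proxy_ssl_verify off. It assumes you run it from a machine that can reach 10.10.10.100:6443 directly.

```python
import http.client
import ssl


def make_upstream_context(certfile=None, keyfile=None) -> ssl.SSLContext:
    """TLS client context mirroring the upstream side of the nginx config."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Mirrors `proxy_ssl_verify off`: don't verify the API server's certificate.
    # check_hostname has to be turned off before verify_mode can be CERT_NONE.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    if certfile:
        # Mirrors proxy_ssl_certificate / proxy_ssl_certificate_key: the client
        # certificate is what the API server uses to identify the caller.
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


def get_pods_status(host: str, port: int, ctx: ssl.SSLContext,
                    namespace: str = "default") -> int:
    """GET the pod list straight from the API server and return the HTTP status."""
    conn = http.client.HTTPSConnection(host, port, context=ctx, timeout=10)
    try:
        conn.request("GET", f"/api/v1/namespaces/{namespace}/pods")
        return conn.getresponse().status
    finally:
        conn.close()
```

Without a certificate, get_pods_status("10.10.10.100", 6443, make_upstream_context()) should return 403, matching the system:anonymous error above (assuming anonymous authentication is left enabled; otherwise expect 401). Passing "/etc/nginx/ssl/client.cert" and "/etc/nginx/ssl/client.key" should return 200, which is exactly what NGINX achieves on behalf of every client.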

Since NGINX now passes the client certificate and key itself, do I still need the client-certificate-data and client-key-data fields in the kubeconfig?

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: [REDACTED]
    server: https://myveryveryveryverylongsubdomain.mydomain.com/
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: [REDACTED]
    client-key-data: [REDACTED]
```

Obviously, I tried removing those things. The new kubeconfig looks like this:

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: [REDACTED]

server: https://myveryveryveryverylongsubdomain.mydomain.com/

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

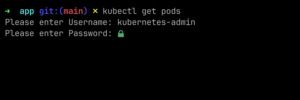

- name: kubernetes-adminI then tried the same thing, asking for the pods in the default namespace. For a moment, I felt relieved because it prompted me for a username and password. It meant that no one—literally not anyone—could access my cluster without my approval, and I felt safe.

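Then I tried something basic: a random password. And oh no, that’s when I realized I had really messed up. It worked, because NGINX attaches the admin client certificate to every request it forwards, so the API server authenticates each request as the kubeconfig’s admin user no matter what username and password kubectl sends.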
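If you want to check whether your own proxy has the same hole, here is a minimal Python sketch. The function names are hypothetical, and it assumes the proxy listens on port 443 as in the corrected config. It sends a request through the public hostname with made-up HTTP Basic credentials, roughly what the username/password prompt supplies; if NGINX is attaching the admin certificate upstream, the API server should authenticate the request from that certificate and ignore the bogus credentials.

```python
import base64
import http.client
import ssl


def basic_auth_header(user: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def probe_proxy(host: str, path: str = "/api/v1/namespaces/default/pods") -> int:
    """Request `path` through the public proxy with made-up credentials.

    Returns the HTTP status. A 200 means the proxy hands its client
    certificate's identity to anyone, whatever credentials they send.
    """
    # The proxy serves a public (Let's Encrypt) certificate, so normal
    # verification against the system trust store applies here.
    ctx = ssl.create_default_context()
    conn = http.client.HTTPSConnection(host, 443, context=ctx, timeout=10)
    try:
        headers = {"Authorization": basic_auth_header("nobody", "not-my-password")}
        conn.request("GET", path, headers=headers)
        return conn.getresponse().status
    finally:
        conn.close()
```

Running probe_proxy("myveryveryveryverylongsubdomain.mydomain.com") from any network and getting 200 back means anyone who can resolve that hostname can list your pods.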

Do you still think you need my approval to use this playground?

What might be the best way, then?

In the next article, I’ll explain the best—or rather, the safest—way to expose your cluster. Or I might just say: don’t expose it at all! Stay tuned for my upcoming article. In the meantime, feel free to browse my other articles here: https://secnep.com

Or, if you’re specifically looking for sysadmin content, you can explore more here: https://secnep.com/category/sysadmins/
